
Introduction

While working with a number of databases, creating a recovery plan can be challenging, especially if we want a foolproof design. Automated backup and restore strategies ensure the recovery plan is successful. However, strategies relying on frequent backups, although part of a sound strategy, can start causing issues when available storage space becomes limited. Old database backups are in many cases unnecessary, and it is often prudent to delete those backups to increase available storage. This can be done manually or automatically. There are several options to delete old backup files automatically in SQL Server:

- Delete old database backup files automatically in SQL Server using a SQL Server Agent Job

- Delete old database backup files automatically in SQL Server using a SQL Server Maintenance plan

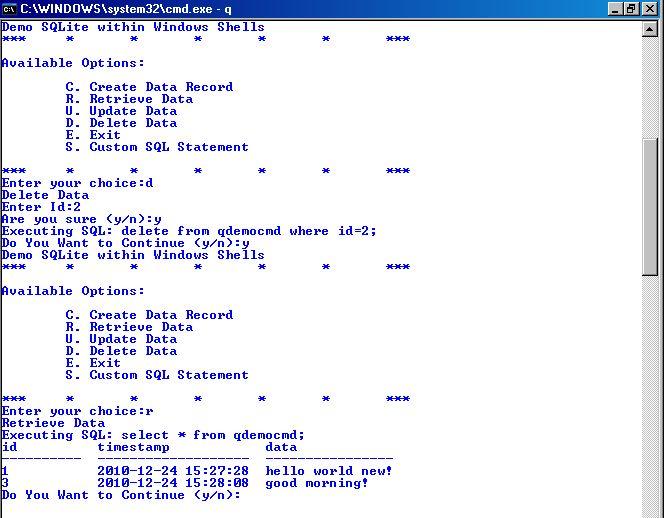

Delete old database backup files automatically in SQL Server using SQL Server Agent:

- You can delete the dump file without corrupting the database. However, you should try to find out who created the dump and why (likely a partial or full backup, though a strange way to do so), and ascertain whether it is still needed.

- Errorlog is the file SQL Server writes with general error and informational messages. It should tell you which process is generating the access violation (AV) errors. You can open that file in Notepad to get more information. In the short term, you can delete files named SQLDumpnnnn.mdmp, since they are not active (though you'll lose the debug info contained in them).

For this option, the SQL Server Agent service must be installed and running. It requires a basic understanding of Transact-SQL (T-SQL) scripting, but once set up the process is entirely automated and needs no further input from the user. We first create a stored procedure, which the SQL Server Agent job then calls. The advantage of this approach is that the same stored procedure can be reused across different jobs with different input parameters. To create a SQL Server Agent job scheduled to delete old backup files, follow these steps:

- The SQL Server Agent service must be running. In Object Explorer, check the icon beside SQL Server Agent. If the message “Agent XPs disabled” is shown beside it, go to Control Panel/System and Security/Administrative Tools and run Services (the exact location may vary between operating systems). Locate the SQL Server Agent service for the corresponding SQL Server instance, select it and click Start in the top left of the window, or simply right-click it and select Start. If the service is already running, you can skip this step:
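The same check can be made from a query window. The following is a minimal sketch, assuming SQL Server 2008 R2 SP1 or later (where the sys.dm_server_services view is available) and a login with VIEW SERVER STATE and ALTER SETTINGS permissions:

```sql
-- Report the state of the services that belong to this instance,
-- including SQL Server Agent.
SELECT servicename, status_desc, startup_type_desc
FROM sys.dm_server_services;

-- 'Agent XPs' is an advanced option, so advanced options must be visible.
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;

-- run_value = 1 means the Agent extended stored procedures are enabled;
-- run_value = 0 corresponds to "Agent XPs disabled" in Object Explorer.
EXEC sp_configure 'Agent XPs';
```

Starting the Agent service normally enables Agent XPs on its own, so the query is mainly useful for confirming the current state.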

Create a user stored procedure which will use the input from the SQL Server Agent scheduled job to delete old backup files. Right click on the database upon which we want to act and select New Query:

In the new query window enter the following T-SQL:
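A minimal sketch of such a procedure follows. It assumes three parameters (folder path, file extension, and age in hours), matching the call used later in the job step; the parameter and variable names are illustrative. Because xp_delete_file is an undocumented extended stored procedure, its behavior may differ between versions, so it is worth trying against a test folder first:

```sql
CREATE PROCEDURE dbo.usp_DeleteOldBackupFiles
    @path      NVARCHAR(256),  -- folder that holds the backup files
    @extension NVARCHAR(10),   -- file extension without the dot, e.g. 'bak'
    @age_hrs   INT             -- delete files older than this many hours
AS
BEGIN
    SET NOCOUNT ON;

    -- Cutoff formatted as yyyy-mm-ddThh:mi:ss (style 126, truncated to seconds).
    DECLARE @cutoff NVARCHAR(19) =
        CONVERT(NVARCHAR(19), DATEADD(HOUR, -@age_hrs, GETDATE()), 126);

    -- Arguments: 0 = backup files, folder, extension, cutoff date,
    -- 1 = also search first-level subfolders.
    EXECUTE master.dbo.xp_delete_file 0, @path, @extension, @cutoff, 1;
END
```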

As can be seen in this query, the extended system stored procedure xp_delete_file is used. It reads the file header to check what type of file it is and deletes only certain file types, based on the input parameters we choose. After running the query, we should end up with a stored procedure:

After creating the stored procedure, we need to create a scheduled job with SQL Server Agent which will use the stored procedure with our parameters to delete the old backup files.

To do that, right click on SQL Server Agent and select New then Job…

On the General tab, enter a descriptive name and optionally a description for the job:

On Steps tab, go to New…:

In the New Job Step window, under the General tab, enter a descriptive step name, under Database select the database on which the job should work, and under Command: insert the following line:

usp_DeleteOldBackupFiles 'D:\MSSQL_DBBackups', 'bak', 720

To explain the line above:

usp_DeleteOldBackupFiles – calls the stored procedure we created earlier

'D:\MSSQL_DBBackups' – the first parameter tells the stored procedure where to look

'bak' – the second parameter tells the stored procedure which file extension to look for. Note: do not put a dot before the extension, because xp_delete_file already takes that into account. '.bak' is incorrect; 'bak' is correct.

720 – the third parameter which tells the stored procedure the number of hours a backup file must be older than to get deleted.
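To see which cutoff a given age produces before scheduling the job, the arithmetic can be checked directly. This sketch only computes the date and deletes nothing:

```sql
-- 720 hours is 30 days; files older than this moment would qualify for deletion.
SELECT DATEADD(HOUR, -720, GETDATE()) AS delete_files_older_than;
```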

Under the Advanced tab, choose what happens after this step completes successfully. Since this is the only step, we can select ‘Quit the job reporting success’. Here we can also set how many times the step will be retried, as well as the interval between retries in minutes.

The next tab is Schedules. Under this tab we can set up when the job runs.

Go to New…:

In the new window, enter a descriptive name for the schedule. Also, for Schedule Type check that it is set to Recurring for proper scheduled operation. After that, we can set up the schedule using the options below:

The rest of the tabs are optional in this use case, though we could use the Notifications tab to set up email notifications when the job is completed.
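The job can also be created with a script instead of the dialogs. The sketch below uses the job procedures in msdb; the job, step, and schedule names, the database name BackupAdmin, the folder path, the retry settings, and the daily 01:00 start time are all assumptions chosen for illustration:

```sql
-- Job shell (General tab).
EXEC msdb.dbo.sp_add_job
    @job_name = N'Delete old backup files',
    @description = N'Removes .bak files older than 720 hours.';

-- Single T-SQL step (Steps and Advanced tabs).
EXEC msdb.dbo.sp_add_jobstep
    @job_name = N'Delete old backup files',
    @step_name = N'Run usp_DeleteOldBackupFiles',
    @subsystem = N'TSQL',
    @database_name = N'BackupAdmin',  -- hypothetical database holding the procedure
    @command = N'EXEC dbo.usp_DeleteOldBackupFiles N''D:\MSSQL_DBBackups'', N''bak'', 720;',
    @on_success_action = 1,           -- quit the job reporting success
    @retry_attempts = 2,
    @retry_interval = 5;              -- minutes between retries

-- Recurring schedule (Schedules tab): every day at 01:00:00.
EXEC msdb.dbo.sp_add_schedule
    @schedule_name = N'Daily at 1 AM',
    @freq_type = 4,                   -- daily
    @freq_interval = 1,               -- every 1 day
    @active_start_time = 10000;       -- HHMMSS as an integer, i.e. 01:00:00

EXEC msdb.dbo.sp_attach_schedule
    @job_name = N'Delete old backup files',
    @schedule_name = N'Daily at 1 AM';

-- Target the local server so that SQL Server Agent actually runs the job.
EXEC msdb.dbo.sp_add_jobserver
    @job_name = N'Delete old backup files',
    @server_name = N'(local)';
```

Scripting the job makes it easier to create the same cleanup on several instances, at the cost of having to keep the script in sync with any later changes made through the dialogs.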

- After completing the previous step, our Job is created. It will run according to schedule, deleting old database backup files as set in the job itself. SQL Server Management Studio by default doesn’t show any real-time notifications when the job is performed. If we want to check the job history, we can right click on the job and select View History:

In the new window we can see the history for the selected job which executed successfully:
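The same history is stored in msdb and can be queried. A sketch, assuming the hypothetical job name used for illustration here:

```sql
-- Most recent outcomes for one job; step_id = 0 is the overall job outcome row.
SELECT TOP (20)
    j.name,
    h.run_date,      -- integer, YYYYMMDD
    h.run_time,      -- integer, HHMMSS
    h.run_status,    -- 0 = failed, 1 = succeeded, 2 = retry, 3 = canceled
    h.message
FROM msdb.dbo.sysjobhistory AS h
JOIN msdb.dbo.sysjobs AS j
    ON j.job_id = h.job_id
WHERE j.name = N'Delete old backup files'  -- hypothetical job name
  AND h.step_id = 0
ORDER BY h.instance_id DESC;
```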

Delete old database backup files automatically in SQL Server using SQL Server Maintenance plan:

SQL Server Maintenance plans are another way of deleting old backup files by using the cleanup task.

After connecting to the server, expand the server node and then the Management folder. Then right-click Maintenance Plans and select Maintenance Plan Wizard.

In the Maintenance Plan Wizard, click Next

Enter a descriptive name and, optionally, a description, and in the bottom right click on Change…

When the New Job Schedule window opens, check that the Schedule type is set to Recurring. Then set up the schedule using the options below and click OK.

Check if everything is correct before clicking on Next:

In the next window, check Maintenance Cleanup Task and click on Next:

In the following window, because we have only one task, there is no ordering, so we proceed by clicking on Next:

In the following window:

Under Delete files of the following type: we select Backup files by clicking on the radio button.

Under File location: we select Search folder and delete files based on an extension. Under that, we specify the folder to search and the file extension to look for. We can also check the Include first-level subfolders option if the backups are stored in separate subfolders.

Note: The file extension we input must not contain dot (‘.’) – ‘.bak’ is incorrect, ‘bak’ is correct

Under File age: we check the option Delete files based on the age of the file at task run time and specify the age of the files below

After checking that everything is correct, we proceed by clicking Next:
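For comparison, the cleanup task is generally understood to issue a call to the undocumented xp_delete_file procedure using the values chosen in this window. A sketch of an equivalent call is shown below, assuming the folder D:\MSSQL_DBBackups, the bak extension, and a file age of four weeks (all illustrative values). Running it deletes matching files, so it is safer to try it against a test folder:

```sql
-- Cutoff: files older than four weeks at run time.
DECLARE @cutoff NVARCHAR(19) =
    CONVERT(NVARCHAR(19), DATEADD(WEEK, -4, GETDATE()), 126);

EXECUTE master.dbo.xp_delete_file
    0,                       -- file type: 0 = backup files, 1 = maintenance plan text reports
    N'D:\MSSQL_DBBackups',   -- folder to search
    N'bak',                  -- extension, without the dot
    @cutoff,                 -- delete files older than this date
    1;                       -- 1 = include first-level subfolders
```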

In the next window, we can choose to have a report written and/or emailed to an address we specify every time the Maintenance plan runs.

In the next window we press Finish to complete the creation of our Maintenance Plan. After that we can check under Management → Maintenance Plans for our newly created plan:
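The new plan can also be confirmed from a query window. A small sketch, assuming read access to msdb:

```sql
-- List maintenance plans stored in msdb, newest first.
SELECT name, create_date, owner
FROM msdb.dbo.sysmaintplan_plans
ORDER BY create_date DESC;
```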


To be useful, backups must be scheduled regularly. A full backup (a snapshot of the data at a point in time) can be done in MySQL with several tools. For example, MySQL Enterprise Backup can perform a physical backup of an entire instance, with optimizations to minimize overhead and avoid disruption when backing up InnoDB data files; mysqldump provides online logical backup. This discussion uses mysqldump.

Assume that we make a full backup of all our InnoDB tables in all databases using the following command on Sunday at 1 p.m., when load is low:

The resulting .sql file produced by mysqldump contains a set of SQL INSERT statements that can be used to reload the dumped tables at a later time.

This backup operation acquires a global read lock on all tables at the beginning of the dump (using FLUSH TABLES WITH READ LOCK). As soon as this lock has been acquired, the binary log coordinates are read and the lock is released. If long updating statements are running when the FLUSH statement is issued, the backup operation may stall until those statements finish. After that, the dump becomes lock-free and does not disturb reads and writes on the tables.

It was assumed earlier that the tables to back up are InnoDB tables, so --single-transaction uses a consistent read and guarantees that data seen by mysqldump does not change. (Changes made by other clients to InnoDB tables are not seen by the mysqldump process.) If the backup operation includes nontransactional tables, consistency requires that they do not change during the backup. For example, for the MyISAM tables in the mysql database, there must be no administrative changes to MySQL accounts during the backup.

Full backups are necessary, but it is not always convenient to create them. They produce large backup files and take time to generate. They are not optimal in the sense that each successive full backup includes all data, even that part that has not changed since the previous full backup. It is more efficient to make an initial full backup, and then to make incremental backups. The incremental backups are smaller and take less time to produce. The tradeoff is that, at recovery time, you cannot restore your data just by reloading the full backup. You must also process the incremental backups to recover the incremental changes.

To make incremental backups, we need to save the incremental changes. In MySQL, these changes are represented in the binary log, so the MySQL server should always be started with the --log-bin option to enable that log. With binary logging enabled, the server writes each data change into a file while it updates data. Looking at the data directory of a MySQL server that has been running for some days, we find these MySQL binary log files:


Each time it restarts, the MySQL server creates a new binary log file using the next number in the sequence. While the server is running, you can also tell it to close the current binary log file and begin a new one manually by issuing a FLUSH LOGS SQL statement or with a mysqladmin flush-logs command. mysqldump also has an option to flush the logs. The .index file in the data directory contains the list of all MySQL binary logs in the directory.
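From a client session, the rotation and the resulting file list can be observed as follows. This is a sketch that assumes binary logging is enabled and that the account holds the privileges these statements need (RELOAD for FLUSH, REPLICATION CLIENT for SHOW BINARY LOGS):

```sql
-- Flush the server logs; for the binary log this closes the current file
-- and starts the next one in the sequence.
FLUSH LOGS;

-- List the binary log files the server currently knows about.
SHOW BINARY LOGS;
```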

The MySQL binary logs are important for recovery because they form the set of incremental backups. If you make sure to flush the logs when you make your full backup, the binary log files created afterward contain all the data changes made since the backup. Let's modify the previous mysqldump command a bit so that it flushes the MySQL binary logs at the moment of the full backup, and so that the dump file contains the name of the new current binary log:

After executing this command, the data directory contains a new binary log file, gbichot2-bin.000007, because the --flush-logs option causes the server to flush its logs. The --master-data option causes mysqldump to write binary log information to its output, so the resulting .sql dump file includes these lines:

Because the mysqldump command made a full backup, those lines mean two things:

- The dump file contains all changes made before any changes written to the gbichot2-bin.000007 binary log file or higher.

- All data changes logged after the backup are not present in the dump file, but are present in the gbichot2-bin.000007 binary log file or higher.

On Monday at 1 p.m., we can create an incremental backup by flushing the logs to begin a new binary log file. For example, executing a mysqladmin flush-logs command creates gbichot2-bin.000008. All changes between the Sunday 1 p.m. full backup and Monday 1 p.m. are written in gbichot2-bin.000007. This incremental backup is important, so it is a good idea to copy it to a safe place. (For example, back it up on tape or DVD, or copy it to another machine.) On Tuesday at 1 p.m., execute another mysqladmin flush-logs command. All changes between Monday 1 p.m. and Tuesday 1 p.m. are written in gbichot2-bin.000008 (which also should be copied somewhere safe).

The MySQL binary logs take up disk space. To free up space, purge them from time to time. One way to do this is by deleting the binary logs that are no longer needed, such as when we make a full backup:


Deleting the MySQL binary logs with mysqldump --delete-master-logs can be dangerous if your server is a replication source server, because replicas might not yet fully have processed the contents of the binary log. The description for the PURGE BINARY LOGS statement explains what should be verified before deleting the MySQL binary logs. See PURGE BINARY LOGS Statement.
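A sketch of purging from a client session rather than through mysqldump is shown below. It assumes every replica has already read past the files being removed. The file name comes from the example above; the seven-day window is an arbitrary illustration:

```sql
-- See which files exist before removing anything.
SHOW BINARY LOGS;

-- Remove all binary log files that precede gbichot2-bin.000007;
-- the named file itself is kept.
PURGE BINARY LOGS TO 'gbichot2-bin.000007';

-- Alternatively, remove files older than seven days.
PURGE BINARY LOGS BEFORE NOW() - INTERVAL 7 DAY;
```

Purging by file name ties the cleanup to a known full backup, while purging by date is simpler to schedule but does not by itself guarantee that a matching full backup exists.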